Sometimes it's necessary to plot a pandas DataFrame as a table in Python or a Jupyter notebook, for instance to keep a data dictionary at hand for easy reference. Plotly makes this easy.

Step 1: Install Plotly

```bash
pip install plotly
```

Step 2: Import Plotly

```python
import plotly.graph_objects as go
```

Step 3: Plot the table

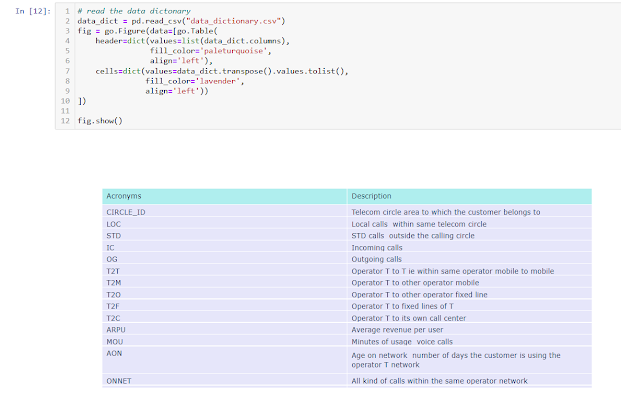

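```python
import pandas as pd
import plotly.graph_objects as go

# Read the data dictionary
data_dict = pd.read_csv("data_dictionary.csv")

fig = go.Figure(data=[go.Table(
    # Header row: the DataFrame's column names
    header=dict(values=list(data_dict.columns),
                fill_color='paleturquoise',
                align='left'),
    # Cells: go.Table expects one list per column, hence the transpose
    cells=dict(values=data_dict.transpose().values.tolist(),
               fill_color='lavender',
               align='left'))])

fig.show()
```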
